The Right—and Wrong—Lessons of the Iraq War

“The whole horrible truth about the war is being revealed,” wrote the theologian Reinhold Niebuhr in 1923, just five years after World War I had ended. “Every new book destroys some further illusion. How can we ever again believe anything?” Americans had once hoped that the Great War would make the world safe for democracy. But by the 1920s, a darker interpretation held sway. Revisionist scholars argued that the Allies were just as responsible for starting the war as the Germans were. They contended that the conflict had simply empowered one set of voracious empires at the expense of another. Most damningly, they claimed that Washington’s war was rooted in avarice and lies—that the United States had been dragged into an unnecessary conflict by financiers, arms manufacturers, and foreign interests. “The moral pretensions of the heroes,” Niebuhr went on, “were bogus.”

In fact, the supposed revelations about World War I were not quite what they seemed. Although the origins of the conflict are endlessly contested, they were grounded primarily in the tensions created by a powerful, provocative Germany. Corporate greed did not drive Washington to war. Instead, issues such as freedom of the seas and outrage at German atrocities spurred it to enter the fight. American intervention was hardly fruitless: it helped turn the tide on the Western front and prevented Germany from consolidating a continental empire from the North Sea to the Caucasus. But amid the disillusion sown by a bloody war and an imperfect peace, more cynical interpretations flourished—and indelibly influenced U.S. policy.

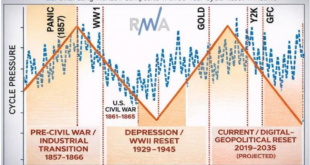

In the 1920s, jaded views of the last war informed the United States’ decision to reject strategic commitments in Europe. In the 1930s, concerns that “merchants of death” had pulled the country into war encouraged the passage of strict neutrality laws meant to keep it out of future conflicts. Leading isolationists, such as Charles Lindbergh and Father Charles Coughlin, channeled this warped interpretation of World War I when they argued that selfish minorities and shadowy interests were once again scheming to draw the United States into war. These dynamics made it harder for the United States to do much more than watch as the global order collapsed. Views of wars past invariably shape how the United States approaches threats present. In the interwar era, historical revisionism in the world’s leading democracy abetted geopolitical revisionism by totalitarian predators.

When seemingly good wars go bad, Americans often conclude that those wars were pointless or corrupt from the get-go. Since the U.S. invasion of Iraq, in 2003, many observers have viewed that conflict as Niebuhr once viewed World War I. There is now a bipartisan consensus that the war was a terrible mistake based on faulty premises, which indeed it was. But many critics go further, peddling what can only be called conspiracy theories: that the war was the work of a powerful pro-Israel lobby or a nefarious cabal of neoconservatives, that President George W. Bush deliberately lied in order to sell a conflict he was eager to wage, or that the United States intervened out of lust for oil or other hidden motives.

These are not the rantings of a lunatic fringe. In 2002, in the run-up to the invasion, Barack Obama, then a state senator in Illinois, labeled the coming conflict a “dumb war” motivated by the Bush administration’s bid to “distract” Americans from economic problems and “corporate scandals.” As president, Donald Trump called the invasion the “single worst decision ever made,” blaming greedy defense contractors and trigger-happy generals for U.S. military misadventures in the Middle East. Other critics have offered more judicious explanations of the war’s origins. But in many quarters, “Iraq” is still synonymous with deception and bad faith.

Twenty years after the U.S. invasion, the passage of time has not obscured the fact that the war was a tragedy that took a heavy toll on the United States and an even heavier toll on Iraq. If World War I was, in retrospect, a war whose benefits surely outweighed its costs for the United States, the Iraq war was one Washington should never have fought. But as Melvyn Leffler shows in a new book, Confronting Saddam Hussein, the war was an understandable tragedy, born of honorable motives and genuine concerns. One might add that it was an American tragedy: the war was not the work of any cunning faction but initially enjoyed broad-based, bipartisan support. Finally, Iraq was an ironic tragedy: the U.S. failure in a war often portrayed as the epitome of American hubris was ultimately a result of, first, too much intervention and, then, too little.

The United States will not have a healthy foreign policy until it properly understands its sad, complex saga in Iraq. A generation after the march on Baghdad, not the least of the lingering challenges of the Iraq war is getting its history, and thus its lessons, right.

MISSION NOT ACCOMPLISHED

No serious observer can dispute one early judgment on Iraq: it was a debacle. The Bush administration decided to confront Saddam Hussein in 2002–3 to eliminate what it viewed as a growing and, after 9/11, intolerable threat to American security. The aim was to topple a brutal tyranny that was a fount of aggression and instability in the Middle East through a brief, low-cost intervention. Very little went according to plan.

Victory over Saddam’s regime gave way to raging insurgency and gruesome civil war. The military and economic costs spiraled upward. Between 2003 and 2011, over 4,000 U.S. service members were killed in operations tied to Iraq and over 31,000 wounded. As for the number of Iraqis killed, no one knows for sure, but researchers have estimated a death toll between 100,000 and 400,000 over the same period. Meanwhile, the credibility of the war effort crumbled when Saddam’s suspected weapons of mass destruction stockpiles turned out mostly not to exist. The U.S. reputation for competence suffered from the deficient planning and serial misjudgments—the failure to adequately prepare for a vacuum of authority after Saddam’s fall, the deployment of too few troops to stabilize the country, the ill-advised disbanding of the Iraqi army, and many others—that marred the subsequent occupation and fueled the ensuing chaos. And rather than strengthening the U.S. geopolitical position, the conflict weakened it almost everywhere.

The war intensified a sectarian maelstrom in Iraq and throughout the Middle East while freeing a theocratic Iran to expand its influence. By turning Iraq into a cauldron of violence, the invasion revived al Qaeda and the broader jihadist movement that had been pummeled after 9/11. Foreign fighters flocked to Iraq for the chance to kill American soldiers. Once there, they created new terrorist networks and gained valuable battlefield experience. The war also caused a painful rift with key European allies; it consumed American energies that might have been applied to other problems, from North Korea’s nuclear program to Russian revanchism and the rise of China.

Yet critiques of the war have become so hyperbolic that it can be difficult to keep the damage in perspective. One prominent commentator, David Kilcullen, even deemed the war comparable to Hitler’s doomed invasion of the Soviet Union. True, the human toll was devastating, but for the U.S. military, it amounted to roughly one-quarter of the deaths that American forces suffered in the bloodiest year of the Vietnam War. Once U.S. forces belatedly got a grip on the insurgency in 2007–8, Iraq became a death trap for the jihadists that had flocked there. Much of the worst damage to U.S. alliances had been patched up by Bush’s second term or simply overtaken by new challenges. By 2013, with U.S. troops gone from Iraq, the Middle East blunder that most preoccupied many European countries was Obama’s decision not to intervene in Syria after Bashar al-Assad used chemical weapons against his own people. Overall, the Iraq war dented American power but hardly destroyed it. In the early 2020s, the United States is still the world’s preeminent economic and military actor, and it has more trouble keeping countries out of its unparalleled alliance network than bringing them in.

Where the war did leave a lasting mark was on the American psyche. A taxing, long-mismanaged intervention undermined domestic confidence in U.S. power and leadership. It elicited calls for retrenchment not simply from Iraq or the Middle East but also from the world. By 2014, the percentage of Americans saying that the United States should “stay out of world affairs” was higher than at any time since polling on that question began. By 2016, the year the country elected a president who revived the isolationist slogan “America First,” 57 percent of respondents in a Pew Research Center survey agreed that Washington should mind its own business. This Iraq hangover was all the more painful because it made the United States strategically sluggish just as the dangers posed by great-power rivals were growing. If Vietnam had, as Henry Kissinger put it in his memoirs, “stimulated an attack on our entire postwar foreign policy,” here, history was indeed repeating itself.

STEPPING BACK IN TIME

How the United States got into this mess is the subject of Confronting Saddam Hussein. No one is better suited to answer the question than Leffler, a widely admired diplomatic historian. His landmark study of the early Cold War, A Preponderance of Power, is a model of how to criticize policymakers’ errors while recognizing their achievements and comprehending the excruciating pressures they felt. Good history demands empathy—seeing the world through the eyes of one’s subjects even when one disagrees with them—and Leffler’s work is suffused with it.

Confronting Saddam Hussein is the most serious scholarly study of the war’s origins, relying on interviews with key policymakers and the limited archival material that has been declassified. Leffler aims to understand, not condemn. His thesis is that the Iraq war was a tragedy, but one that cannot be explained by conspiracy theories or allegations of bad faith.

As Leffler demonstrates, before 9/11, U.S. officials believed the problem posed by an unrepentant, malign Iraq was getting worse, but they showed little urgency in addressing it. After 9/11, long-standing concerns about Saddam’s weapons programs, his ties to terrorists, and his penchant for aggression intersected with newer fears that failing to deal with festering problems, particularly those combining weapons of mass destruction and terrorism, could have catastrophic consequences. Amid palpable insecurity, Bush brought matters to a head, first by threatening war in a bid to make Saddam verifiably disarm, and then—after concluding this coercive diplomacy had failed—by invading. “Fear, power, and hubris,” Leffler writes, produced the Iraq war: fear that Washington could no longer ignore simmering dangers, the power that an unrivaled United States could use to deal with such dangers decisively, the hubris that led Bush to think the undertaking could be accomplished quickly and cheaply.

Leffler’s book is no whitewash. The bureaucratic dysfunction that impeded searching debate before the invasion and competent execution thereafter is on display. So is the failure to scrutinize sketchy intelligence and flawed assumptions. The sense of purpose that motivated Bush after 9/11, combined with his visceral antipathy to Saddam—who was, after all, one of the great malefactors of the modern age—brought moral clarity, as well as strategic myopia. Bush and his close ally, British Prime Minister Tony Blair, “detested Saddam Hussein,” and “their view of his defiance, treachery, and barbarity” powerfully shaped their policies, Leffler notes. But none of this criticism is news in 2023, so Leffler’s real contribution is in exploding pernicious myths about the conflict’s origins.

CORRECTING THE RECORD

One myth is that Iraq was effectively contained circa 2001, so the ensuing invasion addressed an imaginary challenge. In truth, the Iraq problem (how to handle a regime that Washington had defeated in the Gulf War of 1990–91 but that remained a menace to international stability) seemed all too real. Saddam had kicked out UN weapons inspectors in 1998; as the accompanying sanctions regime eroded, Iraq increased funding for its Military-Industrial Commission fortyfold. The regime cultivated myriad terrorist groups in the Palestinian territories, Egypt, and other Middle Eastern countries. Saddam had secretly destroyed his stockpiles of chemical and biological weapons but not the infrastructure for developing them. The decadelong effort to contain Saddam was draining U.S. resources, while the supporting U.S. military presence in Saudi Arabia became an al Qaeda recruiting bonanza. The U.S. global image also suffered from exaggerated claims about the damage economic sanctions were inflicting on Iraqi citizens.

Saddam posed a growing threat, if not an imminent one. This is why, as the political scientist Frank Harvey has demonstrated, any U.S. administration would have felt pressure to resolve the Iraq problem after 9/11. It is also why any responsible critique of the war has to take seriously the perils of not removing Saddam from power—for example, the prospect that he eventually might have used force against his neighbors again or that his ambitions might have interacted explosively with those of a nuclearizing Iran.

Leffler also rebuts the “rush to war” thesis, which holds that Bush had been itching to invade Iraq before 9/11. No senior policymaker was then envisioning anything like a full-on invasion, and Bush’s attention was elsewhere. Even Deputy Defense Secretary Paul Wolfowitz, who favored a long-term effort to remove Saddam’s regime, “was not supporting a military invasion, or the deployment of U.S. ground forces” in early 2001, Leffler writes. After 9/11 dramatically increased U.S. sensitivity to all threats, Bush gradually became convinced of the need to confront Saddam, but it was only in early 2003—after Iraq continued its cat-and-mouse game with inspectors readmitted because of U.S. pressure—that he reluctantly concluded that war was inevitable.

Nor was the war the brainchild of powerful neoconservatives bent on a radical democracy-promotion agenda. In truth, those nearest the center of decision—Secretary of Defense Donald Rumsfeld, Vice President Dick Cheney, and especially Bush himself, who rightly emerges from Leffler’s book as the key actor—were hardly neoconservatives. Rumsfeld and Cheney might be better described as conservative nationalists. Bush himself had campaigned against nation-building missions and called for a “humble” foreign policy when running for president. Officials closer to the neoconservative movement, such as Wolfowitz, had little influence on Iraq policy. When Wolfowitz sought to focus the administration on Iraq just after 9/11, “Bush shunted his advice aside,” Leffler writes. There is no evidence, he argues, that Wolfowitz significantly influenced Bush’s view of the issue. The depiction of “an inattentive chief executive, easily manipulated by neoconservative advisers,” adds Leffler, is simply wrong. True, the idea that democratizing Iraq would have a constructive regional effect was a reinforcing motive for war, and one that Leffler underplays. But Bush didn’t pursue democracy promotion because the neocons wanted it—he did so because the traditional U.S. strategy for defanging defeated tyrannies is to turn them into pacific democracies.

Of course, the challenge Iraq posed was less severe than Bush believed because Saddam had quietly divested himself of chemical and biological weapons stockpiles in the mid-1990s. Yet the “Bush lied, people died” critique falls flat: as Leffler shows, every major U.S. policymaker sincerely believed that Saddam’s weapons programs were more advanced than they were because this was the consensus of the intelligence community. (Moreover, the stockpiles were not totally nonexistent, although they were far smaller and less potent than the intelligence community believed. U.S. forces in Iraq ultimately discovered roughly 5,000 chemical warheads, shells, and bombs, all made before 1991.) As two official investigations concluded, the intelligence was flawed because of bad analysis—and Saddam’s effort to deter his enemies by pretending to possess weapons he did not have—rather than deliberate politicization. Bush and his aides were overzealous in presenting the available evidence, but they were not lying.

Nor did they need to. What is often forgotten now is just how popular a more assertive Iraq policy was. During Bill Clinton’s presidency, the Iraq Liberation Act, which made it U.S. policy “to support efforts to remove the regime headed by Saddam Hussein,” passed Congress in 1998 with overwhelming support. In 2002, the authorization for war won 77 votes in the Senate and 296 in the House. “We have no choice but to eliminate the threat,” Senator Joe Biden declared at the time. “This is a guy who is an extreme danger to the world.” The Iraq war was not foisted on the country by ideological cliques or conflict-craving zealots. It was a war the United States chose in an atmosphere of great fear and imperfect information—and one that, for all its horrors, might have yielded a winning outcome after all.

AMERICA WALKS AWAY

War, the French statesman Georges Clemenceau remarked, is a series of catastrophes that results in a victory. Indeed, if the invasion of Iraq was a mistake, that does not mean the war was lost from that point on. Leffler’s account ends with the botched handling of the initial occupation. But after three years of catastrophe, in late 2006, the Bush administration finally came to grips with the chaos engulfing Iraq, fashioning a new counterinsurgency strategy and supporting it with the deployment of roughly 30,000 badly needed troops.

As detailed empirical work by the scholars Stephen Biddle, Jeffrey Friedman, and Jacob Shapiro demonstrates, this “surge” provided security in key areas and bolstered an uprising of Sunni tribes against jihadists that had overrun their communities. Violence plummeted; al Qaeda in Iraq was brought to the verge of defeat. There was political progress, with the emergence of cross-sectarian electoral coalitions. Had events stayed on this trajectory, they might have resulted in an Iraq that was a relatively stable, democratic, and reliable potential U.S. partner in the broader war on terror; Americans might now view the conflict as a costly victory rather than a costly defeat.

Yet sustaining that trajectory would have required sustaining the U.S. presence in Iraq. Bush’s successor had made his name opposing the war, had long argued that the conflict was lost, and had campaigned on a promise to end it, in part so his administration could focus on the “necessary war” in Afghanistan. Once in office, Obama did not immediately withdraw U.S. forces from Iraq. But after the failure of a desultory effort to negotiate an agreement that would keep a modest stabilization force there beyond 2011, U.S. personnel withdrew in December of that year. Even before that, the Obama administration had pulled back from intensive, hands-on management of Iraq’s complex political scene.

The diplomatic and legal intricacies of the episode were considerable, but studies by journalists, scholars, and participants show that Obama probably could have had a longer-term U.S. presence had he wanted one. Withdrawal had devastating consequences. The pullout removed shock absorbers between Iraqi political factions and left Prime Minister Nouri al-Maliki free to indulge his most sectarian instincts. It helped a nearly vanquished al Qaeda in Iraq reemerge as the Islamic State (also known as ISIS) while denying Washington the intelligence footprint that would have provided greater early warning of the threat. It ultimately contributed to a catastrophic collapse of Iraqi security and a terrorist rampage across a third of the country, which led to another U.S. military intervention and caused many of the same baleful consequences—distraction from other priorities, revitalization of the global jihadist movement, increased Iranian influence in Iraq, global doubts about Washington’s competence and judgment—that Obama rightly argued Bush’s war had caused.

As ISIS advanced to within an hour’s drive of Baghdad in 2014, another angry debate erupted over whether the U.S. withdrawal was to blame. It is impossible to say with certainty, and even an educated guess depends on the size and composition of the force one assumes the United States would have left behind. Yet it seems likely that a force of 10,000 to 20,000 troops (the number that U.S. and Iraqi officials considered plausible when negotiations began), combined with greater political engagement to dampen sectarian tensions after Iraq’s disputed elections in 2010, would have had several constructive effects. It would have shored up Iraqi capabilities, buoyed the self-confidence of Iraqi forces, mitigated the politicization of the country’s elite Counter Terrorism Service, and provided a combination of reassurance and leverage in dealing with the difficult Maliki. If nothing else, a U.S. presence of that size would have given Washington the ability and foreknowledge required to carry out counterterrorism strikes before ISIS had gained critical momentum.

What is undoubtedly true is that by pulling back from Iraq, militarily and diplomatically, the United States lost its ability to preserve the fragile but hopeful trends that had emerged there. The conviction that Iraq was a dumb war, a lost war, helped deprive the United States of a chance to win it.

IRAQ’S LONG SHADOW

What lessons should the United States draw from its Iraq saga? Obama offered the pithiest answer: “Don’t do stupid shit.” Washington should avoid wars of regime change and occupation, limit military involvement in the Middle East, and accept that hard problems must be managed rather than solved. That is the same message conveyed, less colorfully, in the Biden administration’s National Security Strategy, released in October 2022.

At first glance, who can argue? The Iraq war shows the difficulties associated with toppling hostile regimes and implanting democratic alternatives. The complexities of such missions are often greater, and the price higher, than they initially seem. Indeed, the arc of U.S. involvement in Iraq—invading the country, then underinvesting in its stabilization, then withdrawing prematurely after things had turned around—may show that these missions require a mix of patience and commitment that even a superpower struggles to muster.

The trouble is that the same maxim, if applied in earlier eras, would have precluded some of the United States’ greatest foreign policy successes, such as the post–World War II transformation of Japan and Germany. In the same vein, a long-term nation-building program, underpinned by U.S. troops, helped produce the South Korean miracle; post–Cold War interventions in Panama and the Balkans succeeded far more than they failed. Ambitious military campaigns do not always end in sorrow. Some have backfired; some have helped produce the remarkably vibrant, democratic world we inhabit today.

The “no more Iraqs” mindset carries other dangers, as well. In an ideal world, Washington would surely love to abandon an unstable Middle East. Yet it cannot because it still has important interests there, from counterterrorism to ensuring the smooth functioning of the global energy market. A stubborn resistance to Middle Eastern wars might help avoid future quagmires. Or as Obama discovered, it might lead to episodes in which violent upheaval builds, U.S. interests are threatened, and Washington intervenes later, from a worse position and at a higher price.

The truth is that stupid comes in many flavors. It includes unwise interventions and hasty withdrawals, too little assertiveness as well as too much. If the Iraq war teaches anything, it is that U.S. strategy is often a balancing act between underreach and overreach and that there is no single formula that can allow the United States to avoid one danger without courting the other.

The war also teaches the importance of learning and adapting after initial mistakes. Such mistakes are not unusual in the U.S. experience: the real American way of war is to start slowly and make lots of deadly errors. When debacles turn into victories, as was the case in the U.S. Civil War, both world wars, and many other conflicts, it is because Washington eventually masters a steeper learning curve than the adversary while gradually bringing its tremendous might to bear. The nice thing about being a superpower is that even the most tragic and harmful blunders are rarely fatal. How one recovers from mistakes that inevitably occur in war thus matters a great deal.

But learning any lessons from Iraq requires taking the messy history of that war seriously. Accusations that neoconservatives, the foreign policy “blob,” or the Israel lobby are to blame for American misadventures are echoes of the charges that bankers, merchants, and the British drew Washington into World War I. These arguments may be ideologically convenient, but they do not reveal much about why the United States behaves as it does—and how intelligent, well-meaning policymakers sometimes go so badly astray. Good history offers no guarantee that the United States will get the next set of national security decisions right. But bad history surely increases the odds of getting them wrong.

Eurasia Press & News